Docker

This guide demonstrates how to install and configure Docker and the NVIDIA Container Runtime on Jetson Orin series devices. This setup is essential for running GPU-accelerated containers such as Ollama, n8n, ROS, and other AI inference applications.

1. Overview

- Install Docker to support containerized applications

- Configure the NVIDIA runtime to enable GPU acceleration

- Set up non-root (non-sudo) access to Docker

- Set the NVIDIA runtime as the default for persistent usage

This guide covers:

- Docker installation

- NVIDIA runtime configuration

- Runtime validation

- Common troubleshooting

2. System Requirements

| Component | Requirement |
|---|---|
| Jetson Device | Jetson Orin Nano / Orin NX |
| Operating System | Ubuntu 20.04 (JetPack 5.x) or 22.04 (JetPack 6.x) |
| Docker Version | Docker (docker.io or Docker CE) ≥ 20.10 recommended |
| NVIDIA Runtime | nvidia-container-toolkit |
| CUDA Driver | Included in JetPack (JetPack ≥ 5.1.1 required) |

3. Install Docker

Install Docker from the official Ubuntu repository (package docker.io):

```bash
sudo apt-get update
sudo apt-get install -y docker.io
```

⚠️ The Ubuntu docker.io package may lag behind upstream releases. To install the latest Docker CE, use Docker's official APT repository instead.

Verify the Docker installation:

```bash
docker --version
# Example: Docker version 20.10.17, build 100c701
```
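To check the installed version against the ≥ 20.10 recommendation in the System Requirements table, a small helper like the following can be used. It is a sketch that assumes bash and GNU sort (for sort -V); the function names are hypothetical and not part of Docker.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of Docker) to compare `docker --version`
# against a minimum version. Assumes GNU sort with -V (version sort).

# version_ge A B: succeed if version A is greater than or equal to version B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# docker_version_from_string STR: print the version number from a string such as
# "Docker version 20.10.17, build 100c701" (prints nothing if it does not match).
docker_version_from_string() {
  printf '%s\n' "$1" | sed -n 's/^Docker version \([0-9][0-9.]*\).*/\1/p'
}

# check_docker_version [MIN]: check the local Docker CLI against MIN (default 20.10).
check_docker_version() {
  local min="${1:-20.10}" raw ver
  raw="$(docker --version 2>/dev/null)" || { echo "docker not found" >&2; return 1; }
  ver="$(docker_version_from_string "$raw")"
  if [ -n "$ver" ] && version_ge "$ver" "$min"; then
    echo "Docker $ver OK (>= $min)"
  else
    echo "Docker version '${ver:-unknown}' is older than $min or could not be parsed" >&2
    return 1
  fi
}
```

After sourcing these functions, run check_docker_version, or pass a different minimum such as check_docker_version 24.0.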

4. Run Docker Without sudo (Optional)

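To allow a non-root user to run Docker commands:

```bash
sudo groupadd docker            # Create the docker group (skip if it already exists)
sudo usermod -aG docker "$USER" # Add the current user to the docker group
sudo systemctl restart docker
```

🔁 Log out and back in (or reboot) for the group membership change to take effect. To apply it to the current shell session only, run:

```bash
newgrp docker
```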
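Group changes only apply to new login sessions, which is a common source of confusion. The sketch below (bash; hypothetical function names) distinguishes between the user's configured membership, read from the group database via id -nG USER, and the groups active in the current shell, read via id -nG with no argument.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking docker group membership.

# user_in_group USER GROUP: succeed if USER is configured as a member of GROUP
# (looked up in the group database, so it reflects `usermod` immediately).
user_in_group() {
  id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qx -- "$2"
}

# session_has_group GROUP: succeed if GROUP is active in the *current* shell.
# This stays false after `usermod` until you re-login or run `newgrp docker`.
session_has_group() {
  id -nG | tr ' ' '\n' | grep -qx -- "$1"
}

# check_docker_group: report both states for the current user.
check_docker_group() {
  local user="${USER:-$(id -un)}"
  if ! user_in_group "$user" docker; then
    echo "$user is not in the docker group; run: sudo usermod -aG docker $user" >&2
    return 1
  fi
  if ! session_has_group docker; then
    echo "$user is in the docker group, but this shell predates the change; re-login or run: newgrp docker" >&2
    return 1
  fi
  echo "docker group is active for $user in this shell"
}
```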

5. Install NVIDIA Container Runtime

Install the toolkit that enables GPU access from within containers:

```bash
sudo apt-get install -y nvidia-container-toolkit
```

6. Configure the NVIDIA Docker Runtime

A. Register NVIDIA as a Docker Runtime

Run the following command to register the NVIDIA runtime with Docker:

```bash
sudo nvidia-ctk runtime configure --runtime=docker
```

This adds an nvidia entry to the runtimes section of /etc/docker/daemon.json. It does not make nvidia the default runtime; that is done in step B.

B. Set NVIDIA as the Default Runtime

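Edit the Docker daemon configuration:

```bash
sudo nano /etc/docker/daemon.json
```

Make sure the file contains the following JSON. The runtimes entry should already be present after step A; add the default-runtime key if it is missing:

```json
{
  "runtimes": {
    "nvidia": {
      "path": "nvidia-container-runtime",
      "runtimeArgs": []
    }
  },
  "default-runtime": "nvidia"
}
```

Save and exit the editor.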
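A syntax error in /etc/docker/daemon.json prevents the Docker daemon from starting, so it can be worth checking the file before restarting Docker. The sketch below is a hypothetical helper, not part of Docker or the NVIDIA toolkit: it validates the JSON with python3 -m json.tool when python3 is installed, then uses simple text matching (not a full JSON query) to look for the nvidia runtime entry and the default-runtime key.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sanity-check a Docker daemon.json for the NVIDIA runtime.
# The grep checks are line-based heuristics and assume the layout shown above
# (for example, "default-runtime" and its value on the same line).

check_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }

  # Validate JSON syntax when python3 is available.
  if command -v python3 >/dev/null 2>&1; then
    python3 -m json.tool "$file" >/dev/null 2>&1 \
      || { echo "$file is not valid JSON" >&2; return 1; }
  fi

  # Look for an "nvidia": key (expected under "runtimes").
  grep -q '"nvidia"[[:space:]]*:' "$file" \
    || { echo "no \"nvidia\" runtime entry in $file" >&2; return 1; }

  # The default runtime should be set to nvidia.
  grep -q '"default-runtime"[[:space:]]*:[[:space:]]*"nvidia"' "$file" \
    || { echo "\"default-runtime\" is not \"nvidia\" in $file" >&2; return 1; }

  echo "$file looks OK"
}
```

Run check_daemon_json with no argument to check the live file, or pass another path to check a copy before installing it.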

C. Restart Docker Service

Apply the configuration changes:

```bash
sudo systemctl restart docker
```

Verify that the NVIDIA runtime is active:

```bash
docker info | grep -i runtime
```

The output should include:

```text
Runtimes: io.containerd.runc.v2 nvidia runc
Default Runtime: nvidia
```

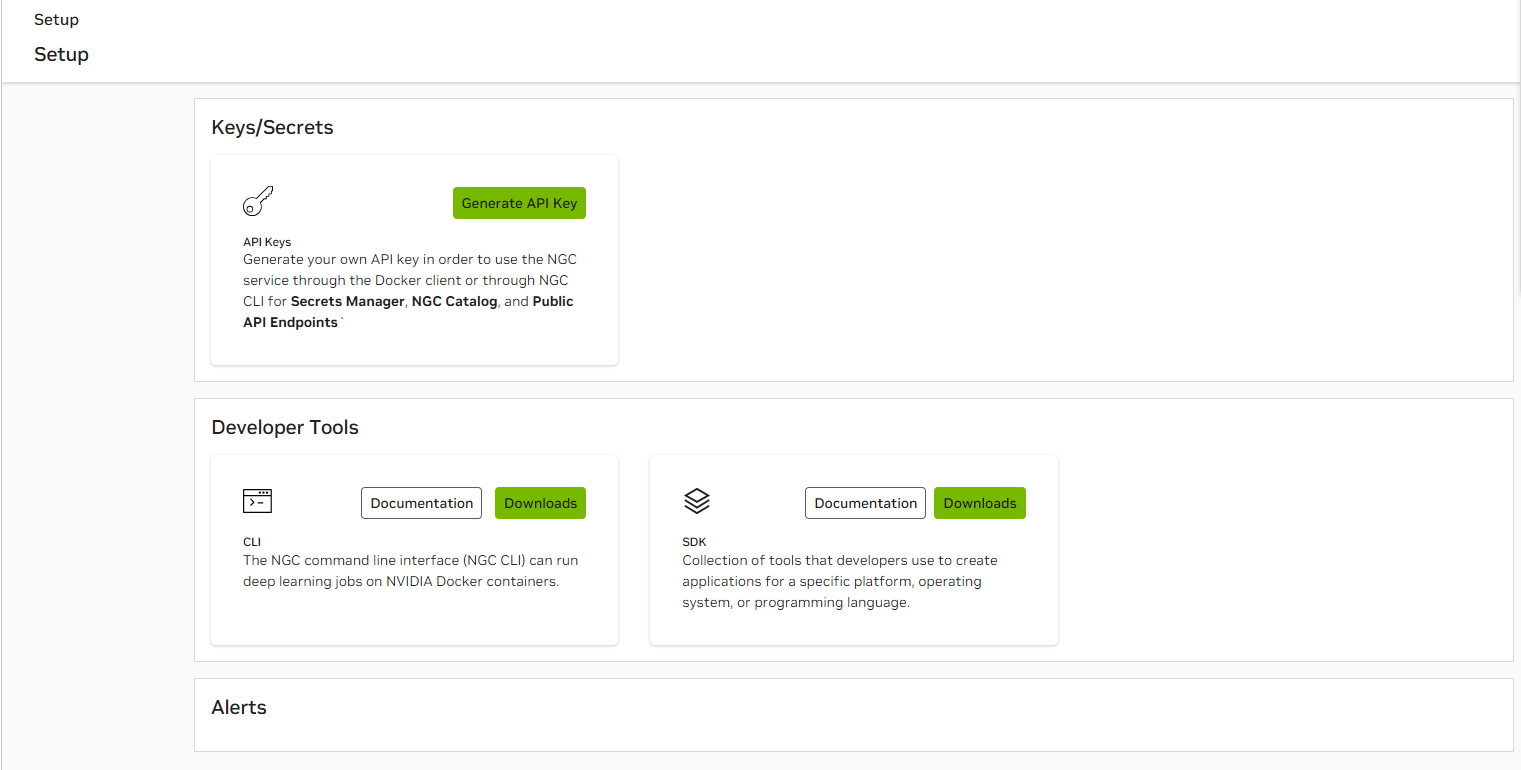

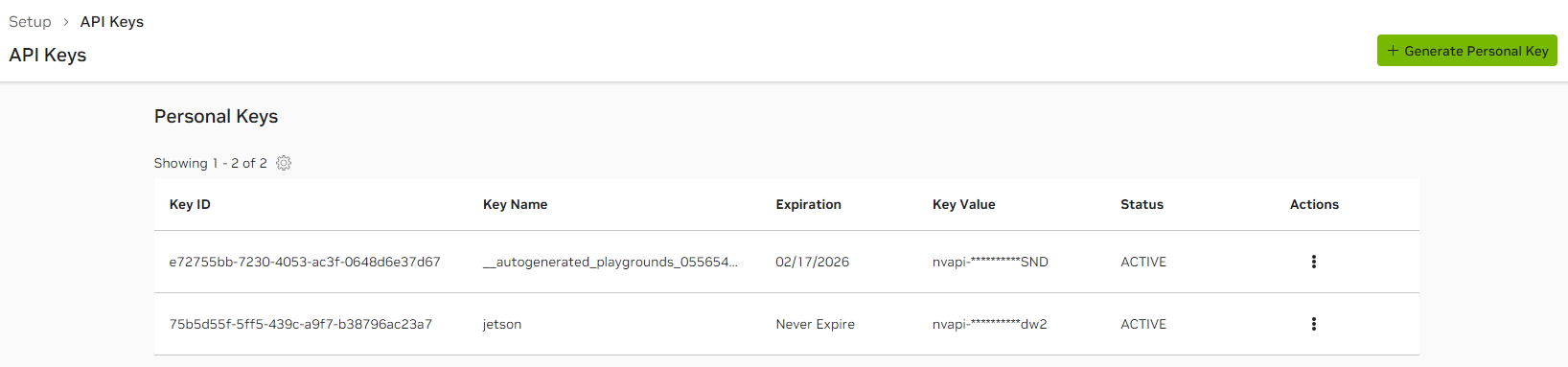
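The grep check above can also be scripted, for example as part of a post-install check. The sketch below defines hypothetical helpers that read docker info output on standard input, which also makes them easy to test against saved output; the patterns assume the Runtimes: and Default Runtime: lines look like the expected output shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers that parse `docker info` output (read from stdin).

# nvidia_runtime_registered: succeed if "nvidia" appears in the Runtimes: line.
nvidia_runtime_registered() {
  grep -Eq '^[[:space:]]*Runtimes:.*[[:space:]]nvidia([[:space:]]|$)'
}

# default_runtime_is_nvidia: succeed if the Default Runtime: line says nvidia.
default_runtime_is_nvidia() {
  grep -Eq '^[[:space:]]*Default Runtime:[[:space:]]*nvidia[[:space:]]*$'
}

# check_nvidia_runtime: run `docker info` once and apply both checks.
check_nvidia_runtime() {
  local info
  info="$(docker info 2>/dev/null)" \
    || { echo "docker info failed (is the Docker daemon running?)" >&2; return 1; }
  printf '%s\n' "$info" | nvidia_runtime_registered \
    || { echo "nvidia runtime is not registered" >&2; return 1; }
  printf '%s\n' "$info" | default_runtime_is_nvidia \
    || { echo "default runtime is not nvidia" >&2; return 1; }
  echo "nvidia runtime is registered and set as the default"
}
```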

D. Log in to nvcr.io (NVIDIA NGC Container Registry)

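Some images on nvcr.io can only be pulled after logging in with an NGC API key:

- Generate an API key (Generate API Key or Generate Personal Key) in your NGC account settings.

- Log in with docker login. When prompted, enter the literal string $oauthtoken as the username and your API key as the password:

```bash
sudo docker login nvcr.io
# Username: $oauthtoken
# Password: <YOUR_NGC_API_KEY>
```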

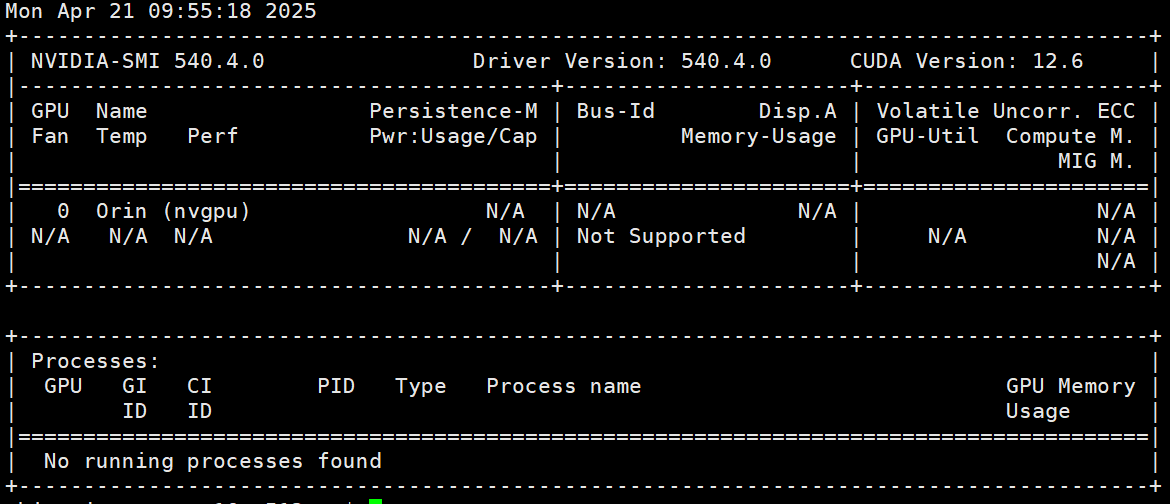
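For scripted setups, the interactive prompt can be avoided with docker login's --password-stdin option, which keeps the key out of the process list and shell history. This is a sketch under stated assumptions: the key is exported in an NGC_API_KEY environment variable, and the DOCKER variable (defaulting to docker; set it to "sudo docker" if you skipped section 4) is a hypothetical convenience. Credentials are saved under the home directory of whichever user runs docker login, so log in as the same user that will later pull images.

```bash
#!/usr/bin/env bash
# Hypothetical helper: non-interactive login to nvcr.io.
# Assumes NGC_API_KEY holds your NGC API key.

ngc_login() {
  if [ -z "${NGC_API_KEY:-}" ]; then
    echo "NGC_API_KEY is not set" >&2
    return 1
  fi
  # The username is the literal string $oauthtoken, so it is single-quoted
  # to stop the shell from expanding it. DOCKER is left unquoted on purpose
  # so that a value such as "sudo docker" splits into two words.
  printf '%s' "$NGC_API_KEY" \
    | ${DOCKER:-docker} login nvcr.io --username '$oauthtoken' --password-stdin
}
```

To avoid typing the key on the command line, read it silently first, for example: read -rs NGC_API_KEY; export NGC_API_KEY; ngc_login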

7. GPU Access Test

Run NVIDIA's L4T base container to verify GPU access:

```bash
docker run --rm --runtime=nvidia nvcr.io/nvidia/l4t-base:r36.2.0 nvidia-smi
```

The image tag (r36.2.0 here) should match the L4T release installed on your device.

Expected output:

- Displays the CUDA version and Jetson GPU details

- Confirms that the container has successfully accessed the GPU

You can also use the community-maintained jetson-containers project to quickly set up your development environment (recommended).
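Because the l4t-base tag is expected to match the host's L4T release, a helper can derive it from /etc/nv_tegra_release. This sketch assumes the usual first-line format of that file (for example "# R36 (release), REVISION: 2.0, ..."); the function name is hypothetical, and NVIDIA does not necessarily publish an l4t-base image for every L4T revision, so check that the tag exists on NGC before relying on it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive an L4T tag such as "r36.2.0" from
# /etc/nv_tegra_release. Assumes the first line looks like:
#   # R36 (release), REVISION: 2.0, GCID: ..., BOARD: ..., EABI: aarch64, DATE: ...

l4t_tag_from_release() {
  local file="${1:-/etc/nv_tegra_release}" major rev
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
  major="$(sed -n '1s/^# R\([0-9][0-9]*\) (release).*/\1/p' "$file")"
  rev="$(sed -n '1s/.*REVISION: \([0-9][0-9.]*\),.*/\1/p' "$file")"
  if [ -z "$major" ] || [ -z "$rev" ]; then
    echo "unrecognized format in $file" >&2
    return 1
  fi
  echo "r${major}.${rev}"
}
```

The result can then be substituted into the test command, for example: docker run --rm --runtime=nvidia "nvcr.io/nvidia/l4t-base:$(l4t_tag_from_release)" nvidia-smi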

8. Troubleshooting

| Issue | Solution |
|---|---|
| nvidia-smi not found | Jetson devices may not provide nvidia-smi; use tegrastats to monitor the GPU instead |
| No GPU access in container | Ensure the default runtime is set to nvidia, or pass --runtime=nvidia to docker run |
| Permission denied errors | Verify that the user is in the docker group, then log out and back in |
| Container crashes | Check the Docker daemon logs with journalctl -u docker.service |

9. Appendix

Key File Paths

| File | Purpose |
|---|---|
| /etc/docker/daemon.json | Docker runtime configuration |
| /usr/bin/nvidia-container-runtime | Path to NVIDIA runtime binary |
| ~/.docker/config.json | (Optional) Docker user-specific config |